Although next-generation identity checking software promises safer and more secure verification, new threats are constantly being introduced. Deepfakes, such as AI-generated images of a user, can fool these systems. In fact, recent reports have shown how AI-generated images can trick 3D liveness detectors, enabling deepfake identity theft.

How does identity theft work with deepfakes?

In some tests, deepfakes are used to replicate a target's face or to “spoof” liveness detectors, for example by making it seem that a person who is not actually there is in the room taking a selfie. The problem is prevalent in the industry, with many top identity verification tools being fooled in some tests.

Ultimately, to protect against deepfake fraud, AI verification and validation systems must include advanced algorithms that can spot AI-created images, as well as next-generation facial recognition that can identify fine details and better recognize spoofing.

What Is Deepfake Identity Theft?

Deepfake identity theft refers to using AI-generated images to spoof someone’s likeness. This spoofing is typically used to gain access to personal data or make fraudulent purchases.

AI systems can now quickly create lifelike images of people. These images can be used in videos or to trick identity checker apps. Ultimately, deepfake identity theft is difficult to detect because of these factors:

- Realism: AI-generated images often display a high level of realism, making it tough to distinguish them from genuine images. Advanced tools like DALL-E can generate intricate textures, lighting effects, and even simulate human-like flaws.

- Fast-Paced Progress: AI is advancing quickly. Newer and more complex methods for creating realistic images are constantly emerging. Staying ahead of these developments demands continuous innovation and vigilance.

How do these deepfakes trick ID scanners?

Essentially, the software looks for similarities between faces and matches them to real people. The trick also takes advantage of how easily people are led to trust what they initially see. Most human eyes will never detect the almost seamless video.
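To make the matching step concrete, here is a minimal sketch of how a face matcher might compare a selfie against enrolled users. It is an illustration under stated assumptions, not any vendor's implementation: the three-number "embeddings", the `match_identity` helper, and the 0.8 threshold are all hypothetical, and a real system would derive much longer embeddings from a trained face-recognition model.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine similarity of two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def match_identity(probe, enrolled, threshold=0.8):
    """Return the enrolled name most similar to the probe, or None.

    `threshold` is a hypothetical cut-off: anything below it is
    treated as "no match".
    """
    best_name, best_score = None, threshold
    for name, embedding in enrolled.items():
        score = cosine_similarity(probe, embedding)
        if score >= best_score:
            best_name, best_score = name, score
    return best_name


# Made-up embeddings, for illustration only.
enrolled = {
    "alice": [0.9, 0.1, 0.3],
    "bob": [0.1, 0.8, 0.5],
}
genuine_selfie = [0.88, 0.12, 0.31]     # Alice in front of the camera
deepfake_of_alice = [0.91, 0.09, 0.29]  # synthetic image resembling Alice
stranger = [0.0, 0.0, 1.0]              # someone who is not enrolled

for label, probe in [("genuine", genuine_selfie),
                     ("deepfake", deepfake_of_alice),
                     ("stranger", stranger)]:
    print(label, "->", match_identity(probe, enrolled))
```

In this toy example the matcher only measures how close a face is to an enrolled one, so the genuine selfie and the deepfake both resolve to the same user. Similarity alone cannot tell whether a live person is present, which is why the additional checks described in the next section matter.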

Learn More. See our guide to Identity Theft vs Identity Fraud for key differences and ways you can protect yourself.

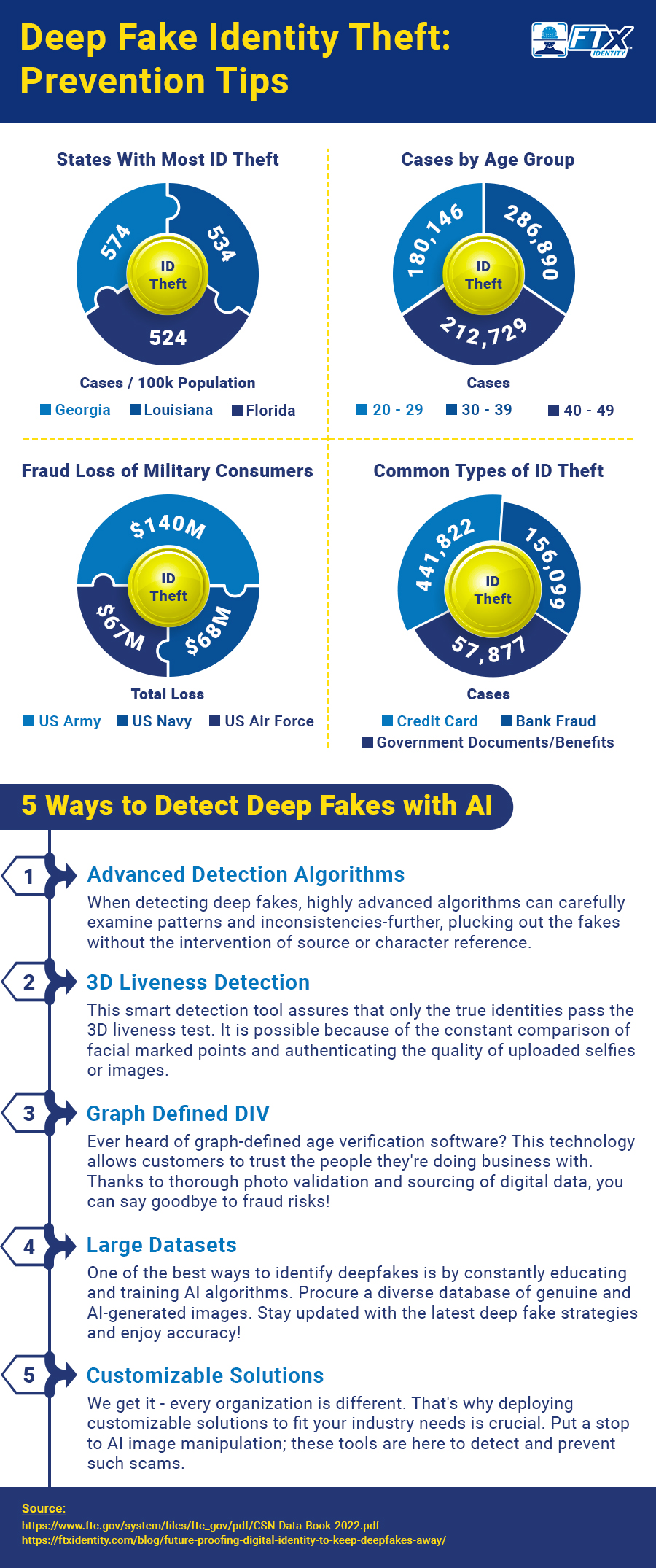

Infographic: Deepfake: Tips for How to Prevent Fraud

How Can You Detect Deepfakes?

While businesses can protect themselves from deepfake fraud and lessen the impact of future identity-based attacks, current fraud detection techniques cannot verify 100% of true identities online. To identify third-party fraud, fake IDs, and impersonation attempts, financial institutions and fintech businesses must exercise extra caution while onboarding new customers. With the right technology, businesses can correctly identify deepfakes and stop more fraud.

Detecting deepfake identity theft isn’t an easy task. As AI image generation tools evolve, the ability to detect their images changes too. Algorithms must be trained to spot tiny inconsistencies in these images. Here’s a look at how AI verification works:

1. Advanced Detection Algorithms: Highly precise algorithms must be developed to scrutinize visual and contextual cues. These algorithms can identify subtle artifacts, inconsistencies, and patterns that signal AI manipulation, even in the absence of reference data or ground truth.

2. 3D Liveness Detection: In addition to checking PII during onboarding, businesses must confirm identity with deep 3D liveness tests, which estimate liveness by evaluating selfie quality and depth cues for face authentication. In many instances, fraudsters try to pass themselves off as someone else by pairing real PII with a headshot that doesn’t reflect the person’s true identity.

3. Graph-Defined DIV: Graph-defined age verification software can help with this. By continuously sourcing digital data during the photo validation process, it gives customers confidence in the identities of the people they transact with and lowers their risk of fraud.

4. Large Datasets: To best train algorithms, a large and diverse dataset of both authentic and AI-generated images is needed. This extensive training data allows the algorithms to learn and adapt to the latest trends in AI image generation, ensuring superior detection accuracy.

5. Customizable Solutions: Recognizing that each organization has unique needs and requirements, customizable solutions are offered that can be tailored to specific industries. This ensures effective detection and prevention of AI-generated image manipulation.
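The layers listed above can be combined into a single onboarding decision. The sketch below is one hypothetical way to do that, not a description of any specific product: the `VerificationSignals` fields, the `decide` function, the score ranges, and the default thresholds are all assumptions made for illustration. The scores themselves would come from a detection model and a liveness test, which are outside the scope of this example.

```python
from dataclasses import dataclass


@dataclass
class VerificationSignals:
    # All fields are hypothetical outputs of upstream checks.
    synthetic_score: float  # 0..1, higher = more likely AI-generated
    liveness_score: float   # 0..1, higher = more likely a live person
    pii_matches: bool       # submitted PII matches the ID document


def decide(signals, max_synthetic=0.3, min_liveness=0.7):
    """Combine the checks into approve / manual_review / reject.

    The thresholds are illustrative defaults that an organization
    would tune to its own risk tolerance.
    """
    reasons = []
    if signals.synthetic_score > max_synthetic:
        reasons.append("possible AI-generated image")
    if signals.liveness_score < min_liveness:
        reasons.append("liveness check failed")
    if not signals.pii_matches:
        reasons.append("PII mismatch")

    if not reasons:
        return "approve", reasons
    if len(reasons) == 1:
        # A single failed check is escalated to a human reviewer.
        return "manual_review", reasons
    return "reject", reasons


# Real PII paired with a synthetic headshot: the PII check passes,
# but the image-based checks do not.
stolen_pii_with_deepfake = VerificationSignals(
    synthetic_score=0.9, liveness_score=0.4, pii_matches=True
)
print(decide(stolen_pii_with_deepfake))
```

Keeping the thresholds as parameters reflects the point about customizable solutions: a bank might send any single failed check to manual review, while a lower-risk business could choose more lenient limits.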

The accessibility of deepfake software has made it more difficult to stop digital fraud and deepfake identity theft. Everyone, from ordinary consumers with no technical skills to state-sponsored actors, has easy access to phone apps and computer software that can create fake content. The technology is a particularly pernicious fraud vector because it is getting harder for both people and fraud detection tools to distinguish real video or audio from deepfakes.

Detecting this fraud requires the latest identity verification methods.

Deepfake Fraud Risks on the Rise

Deepfake technology is used by fraudsters to repeatedly commit identity theft and fraud, causing havoc in a variety of businesses. Numerous sectors are susceptible to deepfakes, but those that deal with significant volumes of personally identifiable information (PII) and client assets are particularly at risk.

Financial institutions and fintech businesses are vulnerable to a variety of identity frauds since they handle consumer data when onboarding new clients and opening new accounts. Deepfakes are a tool that fraudsters can use to attack these companies, resulting in identity theft, bogus claims, and new account fraud. Successful fraud attempts may be used to create a large number of false identities, enabling criminals to launder money or take over financial accounts. Deepfake identity theft has the potential to seriously harm businesses through monetary loss, damage to their reputations, and poorer customer experiences.

- Loss of money: In early 2020, a bank manager in Hong Kong got a call from someone claiming to be a client and asking for permission to transfer funds for an impending transaction. Bad actors defrauded the bank of $35 million using AI speech-generation software that mimicked the client’s voice. Once transferred, the money couldn’t be traced.

- Management of reputation: Deepfake misinformation damages a company’s reputation in ways that are difficult to fix. Successful fraud attempts that cause monetary loss may have a detrimental effect on customers’ trust in and perception of a business generally, making it challenging for businesses to defend their position.

- Effect on the customer experience: Businesses were pushed by the pandemic to stop sophisticated fraud efforts while maintaining positive customer experiences. Customers will have unpleasant experiences at almost every stage of the customer journey if companies fail to rise to the challenge and become fraud-ridden. To identify and protect against deepfake fraud efforts from the start, businesses must add new layers of defense to their onboarding procedures.

Conclusion

Even though it can be challenging to avoid all forms of fraud, security teams can halt deepfake identity theft in its tracks by moving past outdated methods and using identity verification procedures with predictive AI/ML analytics to reliably spot fraud and foster online trust.

With FTx Identity, your customers can have peace of mind that their accounts are protected from fraud and their data is secure. Our innovative identity verification software prevents the sale of age-restricted products to minors while integrating seamlessly with desktop, mobile, and web applications for an effortless user experience. Customers benefit from easy self-signup and online ID verification, so they can buy age-restricted products while protecting their personal information, and retailers can stay compliant with regulations and sell age-restricted products without the fear of losing their license.